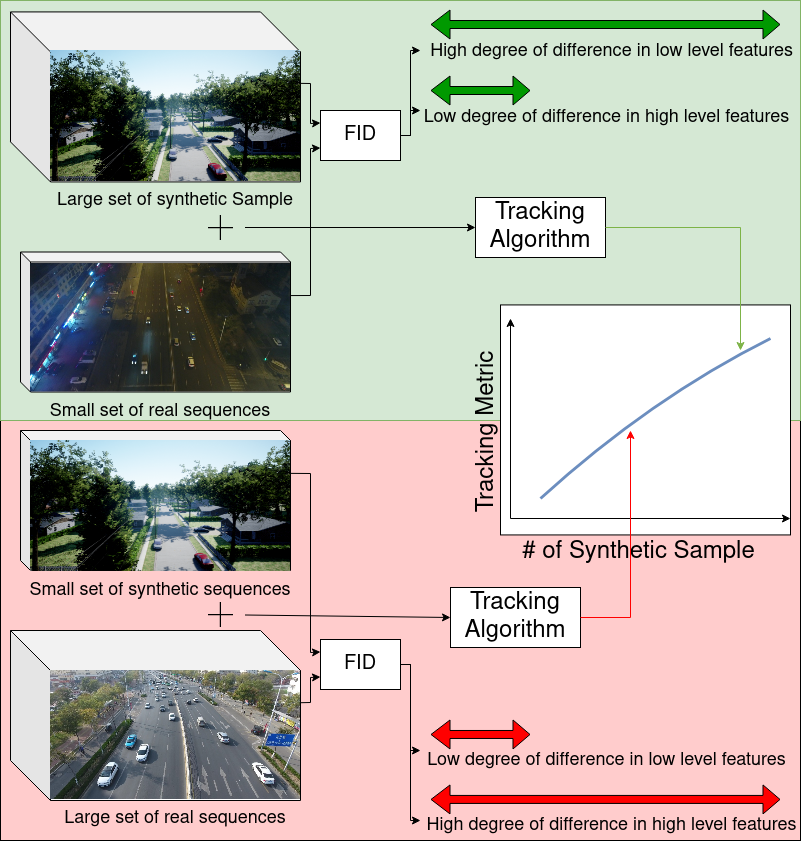

Strategic Incorporation of Synthetic Data for Performance Enhancement in Deep Learning: A Case Study on Object Tracking Tasks

Our paper, "Strategic Incorporation of Synthetic Data for Performance Enhancement in Deep Learning: A Case Study on Object Tracking Tasks," has been published as a conference paper at the 18th International Symposium on Visual Computing (ISVC), 2023. The work is co-authored by Jatin Katyal and Charalambos Poullis.